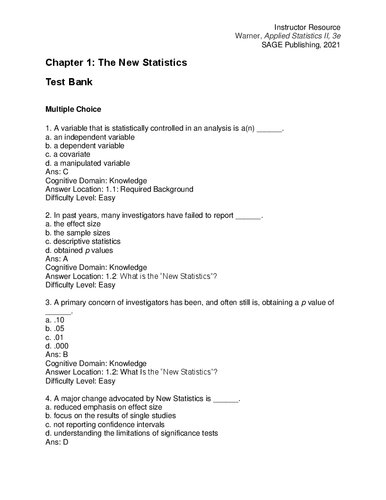

Applied Statistics II Multivariable and Multivariate Techniques Test Bank 3rd Edition by Rebecca Warner ISBN 9781544398723 1544398727

Original price: $50.00. Current price: $25.00.

Applied Statistics II Multivariable and Multivariate Techniques Test Bank 3rd Edition by Rebecca Warner – Ebook PDF Instant Download/Delivery: 9781544398723, 1544398727

Full download of the Applied Statistics II Multivariable and Multivariate Techniques Test Bank 3rd Edition is available after payment.

Product details:

ISBN 10: 1544398727

ISBN 13: 9781544398723

Author: Rebecca Warner

Applied Statistics II Multivariable and Multivariate Techniques Test Bank 3rd Edition Table of contents:

Chapter 1 • The New Statistics

1.1 Required Background

1.2 What Is the “New Statistics”?

1.3 Common Misinterpretations of p Values

1.4 Problems With NHST Logic

1.5 Common Misuses of NHST

1.5.1 Violations of Assumptions

1.5.2 Violations of Rules for Use of NHST

1.5.3 Ignoring Artifacts and Data Problems That Bias p Values

1.5.4 Summary

1.6 The Replication Crisis

1.7 Some Proposed Remedies for Problems With NHST

1.7.1 Bayesian Statistics

1.7.2 Replace α = .05 with α = .005

1.7.3 Less Emphasis on NHST

1.8 Review of Confidence Intervals

1.8.1 Review: Setting Up CIs

1.8.2 Interpretation of CIs

1.8.3 Graphing CIs

1.8.4 Understanding Error Bar Graphs

1.8.5 Why Report CIs Instead of, or in Addition to, Significance Tests?

1.9 Effect Size

1.9.1 Generalizations About Effect Sizes

1.9.2 Test Statistics Depend on Effect Size Combined With Sample Size

1.9.3 Using Effect Size to Evaluate Theoretical Significance

1.9.4 Use of Effect Size to Evaluate Practical or Clinical Importance (or Significance)

1.9.5 Uses for Effect Sizes

1.10 Brief Introduction to Meta-Analysis

1.10.1 Information Needed for Meta-Analysis

1.10.2 Goals of Meta-Analysis

1.10.3 Graphic Summaries of Meta-Analysis

1.11 Recommendations for Better Research and Analysis

1.11.1 Recommendations for Research Design and Data Analysis

1.11.2 Recommendations for Authors

1.11.3 Recommendations for Journal Editors and Reviewers

1.11.4 Recommendations for Teachers of Research Methods and Statistics

1.11.5 Recommendations About Institutional Incentives and Norms

1.12 Summary

Chapter 2 • Advanced Data Screening: Outliers and Missing Values

2.1 Introduction

2.2 Variable Names and File Management

2.2.1 Case Identification Numbers

2.2.2 Codes for Missing Values

2.2.3 Keeping Track of Files

2.2.4 Use Different Variable Names to Keep Track of Modifications

2.2.5 Save SPSS Syntax

2.3 Sources of Bias

2.4 Screening Sample Data

2.4.1 Data Screening Need in All Situations

2.4.2 Data Screening for Comparison of Group Means

2.4.3 Data Screening for Correlation and Regression

2.5 Possible Remedy for Skewness: Nonlinear Data Transformations

2.6 Identification of Outliers

2.6.1 Univariate Outliers

2.6.2 Bivariate and Multivariate Outliers

2.7 Handling Outliers

2.7.1 Use Different Analyses: Nonparametric or Robust Methods

2.7.2 Handling Univariate Outliers

2.7.3 Handling Bivariate and Multivariate Outliers

2.8 Testing Linearity Assumption

2.9 Evaluation of Other Assumptions Specific to Analyses

2.10 Describing Amount of Missing Data

2.10.1 Why Missing Values Create Problems

2.10.2 Assessing Amount of Missingness Using SPSS Base

2.10.3 Decisions Based on Amount of Missing Data

2.10.4 Assessment of Amount of Missingness Using SPSS Missing Values Add-On

2.11 How Missing Data Arise

2.12 Patterns in Missing Data

2.12.1 Type A and Type B Missingness

2.12.2 MCAR, MAR, and MNAR Missingness

2.12.3 Detection of Type A Missingness

2.12.4 Detection of Type B Missingness

2.13 Empirical Example: Detecting Type A Missingness

2.14 Possible Remedies for Missing Data

2.15 Empirical Example: Multiple Imputation to Replace Missing Values

2.16 Data Screening Checklist

2.17 Reporting Guidelines

2.18 Summary

Appendix 2A: Brief Note About Zero-Inflated Binomial or Poisson Regression

Chapter 3 • Statistical Control: What Can Happen When You Add a Third Variable?

3.1 What Is Statistical Control?

3.2 First Research Example: Controlling for a Categorical X2 Variable

3.3 Assumptions for Partial Correlation Between X1 and Y, Controlling for X2

3.4 Notation for Partial Correlation

3.5 Understanding Partial Correlation: Use of Bivariate Regressions to Remove Variance Predictable by X2 From Both X1 and Y

3.6 Partial Correlation Makes No Sense if There Is an X1 × X2 Interaction

3.7 Computation of Partial r From Bivariate Pearson Correlations

3.8 Significance Tests, Confidence Intervals, and Statistical Power for Partial Correlations

3.8.1 Statistical Significance of Partial r

3.8.2 Confidence Intervals for Partial r

3.8.3 Effect Size, Statistical Power, and Sample Size Guidelines for Partial r

3.9 Comparing Outcomes for rY1.2 and rY1

3.10 Introduction to Path Models

3.11 Possible Paths Among X1, Y, and X2

3.12 One Possible Model: X1 and Y Are Not Related Whether You Control for X2 or Not

3.13 Possible Model: Correlation Between X1 and Y Is the Same Whether X2 Is Statistically Controlled or Not (X2 Is Irrelevant to the X1, Y Relationship)

3.14 When You Control for X2, Correlation Between X1 and Y Drops to Zero

3.14.1 X1 and X2 Are Completely Redundant Predictors of Y

3.14.2 X1, Y Correlation Is Spurious

3.14.3 X1, Y Association Is Completely Mediated by X2

3.14.4 True Nature of the X1, Y Association (Their Lack of Association) Is “Suppressed” by X2

3.14.5 Empirical Results Cannot Determine Choice Among These Explanations

3.15 When You Control for X2, the Correlation Between X1 and Y Becomes Smaller (But Does Not Drop to Zero or Change Sign)

3.16 Some Forms of Suppression: When You Control for X2, r1Y.2 Becomes Larger Than r1Y or Opposite in Sign to r1Y

3.16.1 Classical Suppression: Error Variance in Predictor Variable X1 Is “Removed” by Control Variable X2

3.16.2 X1 and X2 Both Become Stronger Predictors of Y When Both Are Included in Analysis

3.16.3 Sign of X1 as a Predictor of Y Reverses When Controlling for X2

3.17 “None of the Above”

3.18 Results Section

3.19 Summary

Chapter 4 • Regression Analysis and Statistical Control

4.1 Introduction

4.2 Hypothetical Research Example

4.3 Graphic Representation of Regression Plane

4.4 Semipartial (or “Part”) Correlation

4.5 Partition of Variance in Y in Regression With Two Predictors

4.6 Assumptions for Regression With Two Predictors

4.7 Formulas for Regression With Two Predictors

4.7.1 Computation of Standard-Score Beta Coefficients

4.7.2 Formulas for Raw-Score (b) Coefficients

4.7.3 Formulas for Multiple R and Multiple R2

4.7.4 Test of Significance for Overall Regression: F Test for H0: R = 0

4.7.5 Test of Significance for Each Individual Predictor: t Test for H0: bi = 0

4.7.6 Confidence Interval for Each b Slope Coefficient

4.8 SPSS Regression

4.9 Conceptual Basis: Factors That Affect the Magnitude and Sign of β and b Coefficients in Multiple Regression With Two Predictors

4.10 Tracing Rules for Path Models

4.11 Comparison of Equations for β, b, pr, and sr

4.12 Nature of Predictive Relationships

4.13 Effect Size Information in Regression With Two Predictors

4.13.1 Effect Size for Overall Model

4.13.2 Effect Size for Individual Predictor Variables

4.14 Statistical Power

4.15 Issues in Planning a Study

4.15.1 Sample Size

4.15.2 Selection of Predictor and/or Control Variables

4.15.3 Collinearity (Correlation) Between Predictors

4.15.4 Ranges of Scores

4.16 Results

4.17 Summary

Chapter 5 • Multiple Regression With Multiple Predictors

5.1 Research Questions

5.2 Empirical Example

5.3 Screening for Violations of Assumptions

5.4 Issues in Planning a Study

5.5 Computation of Regression Coefficients With k Predictor Variables

5.6 Methods of Entry for Predictor Variables

5.6.1 Standard or Simultaneous Method of Entry

5.6.2 Sequential or Hierarchical (User-Determined) Method of Entry

5.6.3 Statistical (Data-Driven) Order of Entry

5.7 Variance Partitioning in Standard Regression Versus Hierarchical and Statistical Regression

5.8 Significance Test for an Overall Regression Model

5.9 Significance Tests for Individual Predictors in Multiple Regression

5.10 Effect Size

5.10.1 Effect Size for Overall Regression (Multiple R)

5.10.2 Effect Size for Individual Predictor Variables (sr2)

5.11 Changes in F and R as Additional Predictors Are Added to a Model in Sequential or Statistical Regression

5.12 Statistical Power

5.13 Nature of the Relationship Between Each X Predictor and Y (Controlling for Other Predictors)

5.14 Assessment of Multivariate Outliers in Regression

5.15 SPSS Examples

5.15.1 SPSS Menu Selections, Output, and Results for Standard Regression

5.15.2 SPSS Menu Selections, Output, and Results for Sequential Regression

5.15.3 SPSS Menu Selections, Output, and Results for Statistical Regression

5.16 Summary

Appendix 5A: Use of Matrix Algebra to Estimate Regression Coefficients for Multiple Predictors

5.A.1 Matrix Addition and Subtraction

5.A.2 Matrix Multiplication

5.A.3 Matrix Inverse

5.A.4 Matrix Transpose

5.A.5 Determinant

5.A.6 Using the Raw-Score Data Matrices for X and Y to Calculate b Coefficients

Appendix 5B: Tables for Wilkinson and Dallal (1981) Test of Significance of Multiple R2 in Forward Statistical Regression

Appendix 5C: Confidence Interval for R2

Chapter 6 • Dummy Predictor Variables in Multiple Regression

6.1 What Dummy Variables Are and When They Are Used

6.2 Empirical Example

6.3 Screening for Violations of Assumptions

6.4 Issues in Planning a Study

6.5 Parameter Estimates and Significance Tests for Regressions with Dummy Predictor Variables

6.6 Group Mean Comparisons Using One-Way Between-S ANOVA

6.6.1 Sex Differences in Mean Salary

6.6.2 College Differences in Mean Salary

6.7 Three Methods of Coding for Dummy Variables

6.7.1 Regression With Dummy-Coded Dummy Predictor Variables

6.7.2 Regression With Effect-Coded Dummy Predictor Variables

6.7.3 Orthogonal Coding of Dummy Predictor Variables

6.8 Regression Models That Include Both Dummy and Quantitative Predictor Variables

6.9 Effect Size and Statistical Power

6.10 Nature of the Relationship and/or Follow-Up Tests

6.11 Results

6.12 Summary

Chapter 7 • Moderation: Interaction in Multiple Regression

7.1 Terminology

7.2 Interaction Between Two Categorical Predictors: Factorial ANOVA

7.3 Interaction Between One Categorical and One Quantitative Predictor

7.4 Preliminary Data Screening: One Categorical and One Quantitative Predictor

7.5 Scatterplot for Preliminary Assessment of Possible Interaction Between Categorical and Quantitative Predictor

7.6 Regression to Assess Statistical Significance of Interaction Between One Categorical and One Quantitative Predictor

7.7 Interaction Analysis With More Than Three Categories

7.8 Example With Different Data: Significant Sex-by-Years Interaction

7.9 Follow-Up: Analysis of Simple Main Effects

7.10 Interaction Between Two Quantitative Predictors

7.11 SPSS Example of Interaction Between Two Quantitative Predictors

7.12 Results for Interaction of Age and Habits as Predictors of Symptoms

7.13 Graphing Interaction for Two Quantitative Predictors

7.14 Results Section for Interaction of Two Quantitative Predictors

7.15 Additional Issues and Summary

Appendix 7A: Graphing Interactions Between Quantitative Variables “by Hand”

Chapter 8 • Analysis of Covariance

8.1 Research Situations for Analysis of Covariance

8.2 Empirical Example

8.3 Screening for Violations of Assumptions

8.4 Variance Partitioning in ANCOVA

8.5 Issues in Planning a Study

8.6 Formulas for ANCOVA

8.7 Computation of Adjusted Effects and Adjusted Y* Means

8.8 Conceptual Basis: Factors That Affect the Magnitude of SSAadj and SSresidual and the Pattern of Adjusted Group Means

8.9 Effect Size

8.10 Statistical Power

8.11 Nature of the Relationship and Follow-Up Tests: Information to Include in the “Results” Section

8.12 SPSS Analysis and Model Results

8.12.1 Preliminary Data Screening

8.12.2 Assessment of Assumption of No Treatment-by-Covariate Interaction

8.12.3 Conduct Final ANCOVA Without Interaction Term Between Treatment and Covariate

8.13 Additional Discussion of ANCOVA Results

8.14 Summary

Appendix 8A: Alternative Methods for the Analysis of Pretest–Posttest Data

8.A.1 Potential Problems With Gain or Change Scores

Chapter 9 • Mediation

9.1 Definition of Mediation

9.1.1 Path Model Notation

9.1.2 Circumstances in Which Mediation May Be a Reasonable Hypothesis

9.2 Hypothetical Research Example

9.3 Limitations of “Causal” Models

9.3.1 Reasons Why Some Path Coefficients May Be Not Statistically Significant

9.3.2 Possible Interpretations for Statistically Significant Paths

9.4 Questions in a Mediation Analysis

9.5 Issues in Designing a Mediation Analysis Study

9.5.1 Types of Variables in Mediation Analysis

9.5.2 Temporal Precedence or Sequence of Variables in Mediation Studies

9.5.3 Time Lags Between Variables

9.6 Assumptions in Mediation Analysis and Preliminary Data Screening

9.7 Path Coefficient Estimation

9.8 Conceptual Issues: Assessment of Direct Versus Indirect Paths

9.8.1 The Mediated or Indirect Path: ab

9.8.2 Mediated and Direct Path as Partition of Total Effect

9.8.3 Magnitude of Mediated Effect

9.9 Evaluating Statistical Significance

9.9.1 Causal-Steps Approach

9.9.2 Joint Significance Test

9.9.3 Sobel Test of H0: ab = 0

9.9.4 Bootstrapped Confidence Interval for ab

9.10 Effect Size Information

9.11 Sample Size and Statistical Power

9.12 Additional Examples of Mediation Models

9.12.1 Multiple Mediating Variables

9.12.2 Multiple-Step Mediated Paths

9.12.3 Mediated Moderation and Moderated Mediation

9.13 Note About Use of Structural Equation Modeling Programs to Test Mediation Models

9.14 Results Section

9.15 Summary

Chapter 10 • Discriminant Analysis

10.1 Research Situations and Research Questions

10.2 Introduction to Empirical Example

10.3 Screening for Violations of Assumptions

10.4 Issues in Planning a Study

10.5 Equations for Discriminant Analysis

10.6 Conceptual Basis: Factors That Affect the Magnitude of Wilks’ Λ

10.7 Effect Size

10.8 Statistical Power and Sample Size Recommendations

10.9 Follow-Up Tests to Assess What Pattern of Scores Best Differentiates Groups

10.10 Results

10.11 One-Way ANOVA on Scores on Discriminant Functions

10.12 Summary

Appendix 10A: The Eigenvalue/Eigenvector Problem

Appendix 10B: Additional Equations for Discriminant Analysis

Chapter 11 • Multivariate Analysis of Variance

11.1 Research Situations and Research Questions

11.2 First Research Example: One-Way MANOVA

11.3 Why Include Multiple Outcome Measures?

11.4 Equivalence of MANOVA and DA

11.5 The General Linear Model

11.6 Assumptions and Data Screening

11.7 Issues in Planning a Study

11.8 Conceptual Basis of MANOVA

11.9 Multivariate Test Statistics

11.10 Factors That Influence the Magnitude of Wilks’ Λ

11.11 Effect Size for MANOVA

11.12 Statistical Power and Sample Size Decisions

11.13 One-Way MANOVA: Career Group Data

11.14 2 × 3 Factorial MANOVA: Career Group Data

11.14.1 Follow-Up Tests for Significant Main Effects

11.14.2 Follow-Up Tests for Nature of Interaction

11.14.3 Further Discussion of Problems With This 2 × 3 Factorial Example

11.15 Significant Interaction in a 3 × 6 MANOVA

11.16 Comparison of Univariate and Multivariate Follow-Up Analyses

11.17 Summary

Chapter 12 • Exploratory Factor Analysis

12.1 Research Situations

12.2 Path Model for Factor Analysis

12.3 Factor Analysis as a Method of Data Reduction

12.4 Introduction of Empirical Example

12.5 Screening for Violations of Assumptions

12.6 Issues in Planning a Factor-Analytic Study

12.7 Computation of Factor Loadings

12.8 Steps in the Computation of PC and Factor Analysis

12.8.1 Computation of the Correlation Matrix R

12.8.2 Computation of the Initial Factor Loading Matrix A

12.8.3 Limiting the Number of Components or Factors

12.8.4 Rotation of Factors

12.8.5 Naming or Labeling Components or Factors

12.9 Analysis 1: PC Analysis of Three Items Retaining All Three Components

12.9.1 Finding the Communality for Each Item on the Basis of All Three Components

12.9.2 Variance Reproduced by Each of the Three Components

12.9.3 Reproduction of Correlations From Loadings on All Three Components

12.10 Analysis 2: PC Analysis of Three Items Retaining Only the First Component

12.10.1 Communality for Each Item on the Basis of One Component

12.10.2 Variance Reproduced by the First Component

12.10.3 Cannot Reproduce Correlations Perfectly From Loadings on Only One Component

12.11 PC Versus PAF

12.12 Analysis 3: PAF of Nine Items, Two Factors Retained, No Rotation

12.12.1 Communality for Each Item on the Basis of Two Retained Factors

12.12.2 Variance Reproduced by Two Retained Factors

12.12.3 Partial Reproduction of Correlations From Loadings on Only Two Factors

12.13 Geometric Representation of Factor Rotation

12.14 Factor Analysis as Two Sets of Multiple Regressions

12.14.1 Construction of Factor Scores for Each Individual (F1, F2, etc.) From Individual Item z Scores

12.14.2 Prediction of z Scores for Individual Participant (zXi) From Participant Scores on Factors (F1, F2, etc.)

12.15 Analysis 4: PAF With Varimax Rotation

12.15.1 Variance Reproduced by Each Factor at Three Stages in the Analysis

12.15.2 Rotated Factor Loadings

12.15.3 Example of a Reverse-Scored Item

12.16 Questions to Address in the Interpretation of Factor Analysis

12.17 Results Section for Analysis 4: PAF With Varimax Rotation

12.18 Factor Scores Versus Unit-Weighted Composites

12.19 Summary of Issues in Factor Analysis

Appendix 12A: The Matrix Algebra of Factor Analysis

Appendix 12B: A Brief Introduction to Latent Variables in SEM

Chapter 13 • Reliability, Validity, and Multiple-Item Scales

13.1 Assessment of Measurement Quality

13.1.1 Reliability

13.1.2 Validity

13.1.3 Sensitivity

13.1.4 Bias

13.2 Cost and Invasiveness of Measurements

13.2.1 Cost

13.2.2 Invasiveness

13.2.3 Reactivity of Measurement

13.3 Empirical Examples of Reliability Assessment

13.3.1 Definition of Reliability

13.3.2 Test-Retest Reliability Assessment for a Quantitative Variable

13.3.3 Interobserver Reliability Assessment for Scores on a Categorical Variable

13.4 Concepts From Classical Measurement Theory

13.4.1 Reliability as Partition of Variance

13.4.2 Attenuation of Correlations Due to Unreliability of Measurement

13.5 Use of Multiple-Item Measures to Improve Measurement Reliability

13.6 Computation of Summated Scales

13.6.1 Assumption: All Items Measure the Same Construct and Are Scored in the Same Direction

13.6.2 Initial (Raw) Scores Assigned to Individual Responses

13.6.3 Variable Naming, Particularly for Reverse-Worded Questions

13.6.4 Factor Analysis to Assess Dimensionality of a Set of Items

13.6.5 Recoding Scores for Reverse-Worded Items

13.6.6 Summing Scores Across Items to Compute a Total Score: Handling Missing Data

13.6.7 Comparison of Unit-Weighted Summed Scores Versus Saved Factor Scores

13.7 Assessment of Internal Homogeneity for Multiple-Item Measures: Cronbach’s Alpha Reliability Coefficient

13.7.1 Conceptual Basis of Cronbach’s Alpha

13.7.2 Empirical Example: Cronbach’s Alpha for Five Selected CES-D Items

13.7.3 Improving Cronbach’s Alpha by Dropping a “Poor” Item

13.7.4 Improving Cronbach’s Alpha by Increasing the Number of Items

13.7.5 Other Methods of Reliability Assessment for Multiple-Item Measures

13.8 Validity Assessment

13.8.1 Content and Face Validity

13.8.2 Criterion-Oriented Validity

13.8.3 Construct Validity: Summary

13.9 Typical Scale Development Process

13.9.1 Generating and Modifying the Pool of Items or Measures

13.9.2 Administer the Survey to Participants

13.9.3 Factor-Analyze Items to Assess the Number and Nature of Latent Variables or Constructs

13.9.4 Development of Summated Scales

13.9.5 Assess Scale Reliability

13.9.6 Assess Scale Validity

13.9.7 Iterative Process

13.9.8 Create the Final Scale

13.10 A Brief Note About Modern Measurement Theories

13.11 Reporting Reliability

13.12 Summary

Appendix 13A: The CES-D

Appendix 13B: Web Resources on Psychological Measurement

Chapter 14 • More About Repeated Measures

14.1 Introduction

14.2 Review of Assumptions for Repeated-Measures ANOVA

14.3 First Example: Heart Rate and Social Stress

14.4 Test for Participant-by-Time or Participant-by-Treatment Interaction

14.5 One-Way Repeated-Measures Results for Heart Rate and Social Stress Data

14.6 Testing the Sphericity Assumption

14.7 MANOVA for Repeated Measures

14.8 Results for Heart Rate and Social Stress Analysis Using MANOVA

14.9 Doubly Multivariate Repeated Measures

14.10 Mixed-Model ANOVA: Between-S and Within-S Factors

14.10.1 Mixed-Model ANOVA for Heart Rate and Stress Study

14.10.2 Interaction of Intervention Type and Times of Assessment in Hypothetical Experiment With Follow-Up

14.10.3 First Follow-Up: Simple Main Effect (Across Time) for Each Intervention

14.10.4 Second Follow-Up: Comparisons of Intervention Groups at the Same Points in Time

14.10.5 Comparison of Repeated-Measures ANOVA With Difference-Score and ANCOVA Approaches

14.11 Order and Sequence Effects

14.12 First Example: Order Effect as a Nuisance

14.13 Second Example: Order Effect Is of Interest

14.14 Summary and Other Complex Designs

Chapter 15 • Structural Equation Modeling With Amos: A Brief Introduction

15.1 What Is Structural Equation Modeling?

15.2 Review of Path Models

15.3 More Complex Path Models

15.4 First Example: Mediation Structural Model

15.5 Introduction to Amos

15.6 Screening and Preparing Data for SEM

15.6.1 SEM Requires Large Sample Sizes

15.6.2 Evaluating Assumptions for SEM

15.7 Specifying the Structural Equation Model (Variable Names and Paths)

15.7.1 Drawing the Model Diagram

15.7.2 Open SPSS Data File and Assign Names to Measured Variables

15.8 Specify the Analysis Properties

15.9 Running the Analysis and Examining Results

15.10 Locating Bootstrapped CI Information

15.11 Sample Results Section for Mediation Example

15.12 Selected Structural Equation Model Terminology

15.13 SEM Goodness-of-Fit Indexes

15.14 Second Example: Confirmatory Factor Analysis

15.14.1 General Characteristics of CFA

15.15 Third Example: Model With Both Measurement and Structural Components

15.16 Comparing Structural Equation Models

15.16.1 Comparison of Nested Models

15.16.2 Comparison of Non-Nested Models

15.16.3 Comparisons of Same Model Across Different Groups

15.16.4 Other Uses of SEM

15.17 Reporting SEM

15.18 Summary

Chapter 16 • Binary Logistic Regression

16.1 Research Situations

16.1.1 Types of Variables

16.1.2 Research Questions

16.1.3 Assumptions Required for Linear Regression Versus Binary Logistic Regression

16.2 First Example: Dog Ownership and Odds of Death

16.3 Conceptual Basis for Binary Logistic Regression Analysis

16.3.1 Why Ordinary Linear Regression Is Inadequate When Outcome Is Categorical

16.3.2 Modifying the Method of Analysis to Handle a Binary Categorical Outcome

16.4 Definition and Interpretation of Odds

16.5 A New Type of Dependent Variable: The Logit

16.6 Terms Involved in Binary Logistic Regression Analysis

16.6.1 Estimation of Coefficients for a Binary Logistic Regression Model

16.6.2 Assessment of Overall Goodness of Fit for a Binary Logistic Regression Model

16.6.3 Alternative Assessments of Overall Goodness of Fit

16.6.4 Information About Predictive Usefulness of Individual Predictor Variables

16.6.5 Evaluating Accuracy of Group Classification

16.7 Logistic Regression for First Example: Prediction of Death From Dog Ownership

16.7.1 SPSS Menu Selections and Dialog Boxes

16.7.2 SPSS Output

16.7.3 Results for the Study of Dog Ownership and Death

16.8 Issues in Planning and Conducting a Study

16.8.1 Preliminary Data Screening

16.8.2 Design Decisions

16.8.3 Coding Scores on Binary Variables

16.9 More Complex Models

16.10 Binary Logistic Regression for Second Example: Drug Dose and Sex as Predictors of Odds of Death

16.11 Comparison of Discriminant Analysis With Binary Logistic Regression

16.12 Summary

Chapter 17 • Additional Statistical Techniques

17.1 Introduction

17.2 A Brief History of Developments in Statistics

17.3 Survival Analysis

17.4 Cluster Analyses

17.5 Time-Series Analyses

17.5.1 Describing a Single Time Series

17.5.2 Interrupted Time Series: Evaluating Intervention Impact

17.5.3 Cycles in Time Series

17.5.4 Coordination or Interdependence Between Time Series

17.6 Poisson and Binomial Regression for Zero-Inflated Count Data

17.7 Bayes’ Theorem

17.8 Multilevel Modeling

17.9 Some Final Words

Glossary

References

Index
